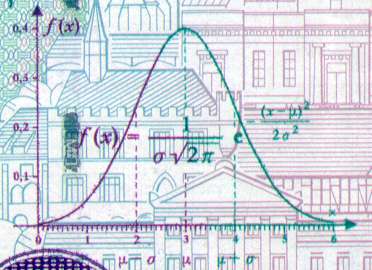

German 10 DM bank note with Gauss curve in the center


Transition

Artificial Life and Hyperintelligence

Copyright © 2001-2023 Hans-Georg Michna.

Table of Contents

Present Knowledge and Speculation

Instinct and Intelligence

Humans and Robots

Breakout

Last edited on 2016-09-21. The original manuscript of the lecture given at the TransVision 2001 conference in Berlin, Germany, transition.doc, is also still available here.

Some people assume that we will inevitably create artificial intelligence that is higher than ours and will ultimately supersede us, possibly within the next 20 years and almost certainly within the next 100. This paper analyzes potential structures of hyperintelligent artificial life and looks at possible scenarios for the transition from human to posthuman rule.

Topics include the differences between human and artificial thinking, the conflict between the wish to raise artificial intelligence levels and the danger of creating artificial hyperintelligent life forms, the ways in which hyperintelligent computers could acquire an expansion instinct, and the dangers we face once we have, intentionally or accidentally, created artificial life.

If humanity created a superior life form, leading to a superior civilization that transcended our planetary limits, should we fear or welcome this development? Should we welcome it even if it could lead to our own demise? This article argues that the development is unavoidable and any attempts to stall the process are ultimately bound to fail.

A human being is a computer's way of making another computer.

Yes, we are their sex organs.

Solomon Short

To gain firm and proven knowledge, we normally use the scientific method. It consists of creating hypotheses, then using experiments and modelling to support or refute these hypotheses. In a social process of experimenting, comparing, writing and discussing we move ever closer to the truth and gain detailed knowledge about the world we live in.

As much as I wish to be able to apply the same proven methods to the problem discussed in this paper, this is currently not possible. We cannot study the future as precisely as we can study, for example, an elephant, for the simple reason that the future does not exist yet. As we observe ever more rapid technological change, we lose the ability to predict anything but the nearest future with any precision.

Moreover, the history of science is littered with grand predictions that turned out to be grossly exaggerated. Many of them never came true at all, even though they might have been feasible in principle. If I now make a prediction even grander than anything predicted before, such as the end of the human era and the rise of a new civilization, a superficial reader will conclude that I am very likely falling into the same well-known trap.

Does this mean we should give in and desist? No, because there is no less at stake than the future of mankind, and one distinct possibility is the end of the human era. Therefore we have to try our best to investigate what is going to happen and what new developments may be coming our way.

We have to do this in spite of its highly speculative nature. We have a duty to alert mankind to the almost unbelievable opportunities, but also to the grave dangers, that we will be facing in the coming years. We have to do this even if we cannot be sure of anything a few years hence. And to do it we need all the intelligence we can muster, but also creativity and even fantasy, as mere analysis will not help us predict the unpredictable.

These are a few pieces of literature that touch on some aspects of our question. Literal quotations are presented in small print.

From Wikipedia: Joseph Jules François Félix Babinski (17 November 1857 – 29 October 1932) was a French neurologist of Polish descent. He is best known for his 1896 description of the Babinski sign, a pathological plantar reflex, indicative of corticospinal tract damage.

In 1932, the last year of his life, Joseph Babinski wrote an intriguing letter to his friend, the Portuguese physician Egas Moniz. The letter is riddled with doubt—not just about interpreting experience, but also about the value of knowledge itself. Excerpt:

… In the present circumstances, in the middle of so many tragic events, one may also wonder if science deserves to be the object of a cult. The most admirable creations of the human mind, contrary to all expectations, have had as their main effect destruction and massacre; with a bit of pessimism, one may curse advances in knowledge and fear that someday some discovery might have as a consequence the destruction of mankind …

From Egas Moniz, “Dr. Joseph Babinski,” Lisboa Medica 1932, as quoted in Jacques Philipon and Jacques Poirier, Joseph Babinski: A Biography, Oxford University Press, 2008. Egas Moniz won the Nobel Prize in 1949 for the development of the prefrontal lobotomy; in 1939 he had been shot and seriously injured by a patient.

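Isaac Asimov's ethical rules for robot behavior, from Handbook of Robotics, 56th Edition, 2058 A.D., as quoted in "I, Robot" (1950):

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.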

Asimov claimed that the Three Laws were originated by John W. Campbell in a conversation they had on December 23, 1940. Campbell in turn maintained that he picked them out of Asimov's stories and discussions, and that his role was merely to state them explicitly.

Unfortunately we have never paid any attention to these laws, particularly when it comes to military robots (beginning with cruise missiles).

Gordon Moore, one of the founders of Intel Corp., observed in 1965 that the density of the elements on a computer component like a semiconductor chip doubles every year, and later corrected the figure to two years.

The figure keeps changing and will surely be reviewed and adjusted with increasing attention over the coming years and decades. Currently a fair estimate is a doubling every 18 months. It remains to be seen how much longer Moore's Law will hold before progress bumps against fundamental physical limits, but many previous predictions of its demise have so far turned out to be premature.
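The practical difference between these doubling periods is easy to underestimate. The following Python sketch compares the growth over one decade for the 12-, 18- and 24-month figures mentioned above. It assumes clean exponential growth with no physical limits, which real hardware only approximates, and the function name is chosen purely for illustration.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Factor by which a quantity grows over `years` if it doubles every
    `doubling_months` months (idealized exponential growth, no limits)."""
    return 2 ** (years * 12 / doubling_months)

# Compare the three doubling periods over one decade.
for months in (12, 18, 24):
    print(f"doubling every {months} months -> x{growth_factor(10, months):,.0f} per decade")
```

Over ten years, a 12-month doubling yields a factor of 1,024, an 18-month doubling roughly 100, and a 24-month doubling only 32, so the choice of figure changes long-range forecasts by more than an order of magnitude.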

Similar laws also seem to hold for several other technological parameters, such as the capacity of storage devices.

Further reading: Was Moore's Law Inevitable?

Original article: The Singularity. Citation:

From the human point of view this change will be a throwing away of all the previous rules, perhaps in the blink of an eye, an exponential runaway beyond any hope of control. Developments that before were thought might only happen in "a million years" (if ever) will likely happen in the next century. (In [5], Greg Bear paints a picture of the major changes happening in a matter of hours.)

I think it's fair to call this event a singularity ("the Singularity" for the purposes of this paper). It is a point where our old models must be discarded and a new reality rules. As we move closer to this point, it will loom vaster and vaster over human affairs till the notion becomes a commonplace. Yet when it finally happens it may still be a great surprise and a greater unknown. In the 1950s there were very few who saw it: Stan Ulam [28] paraphrased John von Neumann as saying:

One conversation centered on the ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.

Von Neumann even uses the term singularity, though it appears he is thinking of normal progress, not the creation of superhuman intellect. (For me, the superhumanity is the essence of the Singularity. Without that we would get a glut of technical riches, never properly absorbed … .)

It needs to be mentioned that exponential growth does not mathematically lead to a singularity, so the expression is somewhat misplaced here, but it has become quite widespread, particularly since Ray Kurzweil (see below) adopted it and spread it further.
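To make the mathematical point concrete: exponential growth stays finite at every finite time, whereas a true singularity, a blow-up at a finite time, requires growth that accelerates faster than exponentially. In the derivation below, x is the growing quantity, k > 0 a growth constant and x_0 > 0 the starting value. The quadratic growth law in the second line is an illustrative assumption, not a model proposed in the quoted texts.

```latex
\frac{dx}{dt} = kx
  \;\Longrightarrow\; x(t) = x_0\,e^{kt}
  \quad\text{(finite for every finite } t\text{)}

\frac{dx}{dt} = kx^2
  \;\Longrightarrow\; \frac{1}{x(t)} = \frac{1}{x_0} - kt
  \;\Longrightarrow\; x(t) = \frac{x_0}{1 - k x_0 t}
  \quad\text{(diverges as } t \to t^{*} = \tfrac{1}{k x_0}\text{)}
```

Only the second kind of growth has a point in time beyond which the curve cannot be continued, which is what the word singularity means in mathematics.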

Kevin Warwick worked in the artificial intelligence lab of the Massachusetts Institute of Technology (MIT). Quoted from the end of the last chapter, "Mankind's Last Stand?":

… There appears to be absolutely nothing to stop machines becoming more intelligent, particularly when we look towards an intelligent machine network. There is no proof no evidence, no physical or biological pointers that indicate that machine intelligence cannot surpass that of humans. Indeed, it is ridiculous to think so. All the signs are that we will rapidly become merely an insignificant historical dot.

It looks unlikely that we will see humanoid robots which are roughly equivalent to or which even replicate humans. There are considerable technical difficulties with this, and little or no driving force. But we cannot conclude that, because machines are unlikely to be approximately equivalent to humans, they will always be subservient to us. In fact, the converse is true: it is because they are different, because they have distinct advantages, many of which we know about already, that machines can be better than we are. In this way they can dominate us physically through their superior intelligence.

The human race, as we know it, is very likely in its end game; our period of dominance on Earth is about to be terminated. We can try and reason and bargain with the machines which take over, but why should they listen when they are far more intelligent than we are? All we should expect is that we humans are treated by the machines in the same way that we now treat other animals, as slave workers, energy producers or curiosities in zoos. We must obey their wishes and live only to serve all our lives, what there is of them, under the control of machines.

As the human race, we are delicately positioned. We have the technology, we have the ability, I believe, to create machines that will not only be as intelligent as humans but that will go on to be far more intelligent still. This will spell the end of the human race as we know it. Is that what we want? Should we not at least have an international body monitoring and even controlling what goes on?

When the first nuclear bombs were dropped on Japan, killing thousands of people, we took stock of our actions and realised the threat that such weapons posed to our existence. Despite the results achieved by the Hiroshima and Nagasaki bombs, even deadlier nuclear bombs have been built, much more powerful, much more accurate and much more intelligent. But with nuclear weapons we saw what they could do and we gave ourselves another chance.

With intelligent machines we will not get a second chance. Once the first powerful machine, with an intelligence similar to that of a human, is switched on, we will most likely not get the opportunity to switch it back off again. We will have started a time bomb ticking on the human race, and we will be unable to switch it off. There will be no way to stop the march of the machines.

Citation from the chapter: "Building New Brains …"

Taking all of this into consideration, it is reasonable to estimate that a $1,000 personal computer will match the computing speed and capacity of the human brain by around the year 2020 … Supercomputers will reach the 20 million billion calculations per second capacity of the human brain around 2010, a decade earlier than personal computers.

See also references to his book: "The Singularity Is Near" at: www.kurzweilai.net

Computer Scientist; Artificial Intelligence Pioneer, Stanford University

Cited from Edge, The World Question Center 2005:

I think human-level artificial intelligence will be achieved.

Cosmologist, Cambridge University; UK Astronomer Royal; Author, Our Final Hour

Cited from Edge, The World Question Center 2005, bold emphasis added:

I believe that intelligent life may presently be unique to our Earth, but that, even so, it has the potential to spread through the galaxy and beyond—indeed, the emergence of complexity could still be near its beginning. If SETI searches fail, that would not render life a cosmic sideshow Indeed, it would be a boost to our cosmic self-esteem: terrestrial life, and its fate, would become a matter of cosmic significance. Even if intelligence is now unique to Earth, there's enough time lying ahead for it to spread through the entire Galaxy, evolving into a teeming complexity far beyond what we can even conceive.

There's an unthinking tendency to imagine that humans will be around in 6 billion years, watching the Sun flare up and die. But the forms of life and intelligence that have by then emerged would surely be as different from us as we are from a bacterium. That conclusion would follow even if future evolution proceeded at the rate at which new species have emerged over the 3 or 4 billion years of the geological past. But post-human evolution (whether of organic species or of artefacts) will proceed far faster than the changes that led to emergence, because it will be intelligently directed rather than being—like pre-human evolution—the gradual outcome of Darwinian natural selection. Changes will drastically accelerate in the present century—through intentional genetic modifications, targeted drugs, perhaps even silicon implants in to the brain. Humanity may not persist as a single species for more than a few centuries—especially if communities have by then become established away from the earth.

But a few centuries is still just a millionth of the Sun's future lifetime—and the entire universe probably has a longer future still. The remote future is squarely in the realm of science fiction. Advanced intelligences billions of years hence might even create new universes. Perhaps they'll be able to choose what physical laws prevail in their creations. Perhaps these beings could achieve the computational capability to simulate a universe as complex as the one we perceive ourselves to be in.

My belief may remain unprovable for billions of years. It could be falsified sooner—for instance, we (or our immediate post-human descendents) may develop theories that reveal inherent limits to complexity. But it's a substitute for religious belief, and I hope it's true.

Physicist, Computer Scientist; Chairman, Applied Minds, Inc.; Author, The Pattern on the Stone

Cited from Edge, The World Question Center 2005, bold emphasis added:

I know that it sounds corny, but I believe that people are getting better. In other words, I believe in moral progress. It is not a steady progress, but there is a long-term trend in the right direction—a two steps forward, one step back kind of progress.

I believe, but cannot prove, that our species is passing through a transitional stage, from being animals to being true humans. I do not pretend to understand what true humans will be like, and I expect that I would not even understand it if I met them. Yet, I believe that our own universal sense of right and wrong is pointing us in the right direction, and that it is the direction of our future.

I believe that ten thousand years from now, people (or whatever we are by then) will be more empathetic and more altruistic than we are. They will trust each other more, and for good reason. They will take better care of each other. They will be more thoughtful about the broader consequences of their actions. They will take better care of their future than we do of ours.

The Unabomber

From his manifesto. (Note that this is not a general endorsement of the manifesto or any of the Unabomber's deeds.)

But we are suggesting neither that the human race would voluntarily turn power over to the machines nor that the machines would willfully seize power. What we do suggest is that the human race might easily permit itself to drift into a position of such dependence on the machines that it would have no practical choice but to accept all of the machines' decisions. … Eventually a stage may be reached at which the decisions necessary to keep the system running will be so complex that human beings will be incapable of making them intelligently. At that stage the machines will be in effective control. People won't be able to just turn the machines off, because they will be so dependent on them that turning them off would amount to suicide.

Read this web page: http://www.hawking.org.uk/life-in-the-universe.html. Citation:

Laws will be passed, against genetic engineering with humans. But some people won't be able to resist the temptation, to improve human characteristics, such as size of memory, resistance to disease, and length of life. Once such super humans appear, there are going to be major political problems, with the unimproved humans, who won't be able to compete. Presumably, they will die out, or become unimportant. Instead, there will be a race of self-designing beings, who are improving themselves at an ever-increasing rate.

If this race manages to redesign itself, to reduce or eliminate the risk of self-destruction, it will probably spread out, and colonise other planets and stars. However, long distance space travel, will be difficult for chemically based life forms, like DNA. The natural lifetime for such beings is short, compared to the travel time. According to the theory of relativity, nothing can travel faster than light. So the round trip to the nearest star would take at least 8 years, and to the centre of the galaxy, about a hundred thousand years. In science fiction, they overcome this difficulty, by space warps, or travel through extra dimensions. But I don't think these will ever be possible, no matter how intelligent life becomes. In the theory of relativity, if one can travel faster than light, one can also travel back in time. This would lead to problems with people going back, and changing the past. One would also expect to have seen large numbers of tourists from the future, curious to look at our quaint, old-fashioned ways.

It might be possible to use genetic engineering, to make DNA based life survive indefinitely, or at least for a hundred thousand years. But an easier way, which is almost within our capabilities already, would be to send machines. These could be designed to last long enough for interstellar travel. When they arrived at a new star, they could land on a suitable planet, and mine material to produce more machines, which could be sent on to yet more stars. These machines would be a new form of life, based on mechanical and electronic components, rather than macromolecules. They could eventually replace DNA based life, just as DNA may have replaced an earlier form of life.

This mechanical life could also be self-designing. Thus it seems that the external transmission period of evolution, will have been just a very short interlude, between the Darwinian phase, and a biological, or mechanical, self design phase. …

His web site is at http://www.simulation-argument.com/. Quoted from the original article:

Abstract: This paper argues that at least one of the following propositions is true: (1) the human species is very likely to go extinct before reaching a "posthuman" stage; (2) any posthuman civilization is extremely unlikely to run a significant number of simulations of their evolutionary history (or variations thereof); (3) we are almost certainly living in a computer simulation. It follows that the transhumanist dogma that there is a significant chance that we will one day become posthumans who run ancestor-simulations is false, unless we are currently living in a simulation. A number of other consequences of this result are also discussed.

The paper raises the question of why we should concern ourselves with the singularity and whether it makes sense to think about the transition at all. The transition might even end the simulation, perhaps because it introduces so much additional complexity that the limits of the simulation are exceeded. Actually, however, the question whether we live in a simulation does not matter all that much, because we should strive to improve our situation anyway, precisely because the question is unanswered. We may not live in a simulation after all.

After we built the first machines, some people concluded that the whole world is essentially a machine and that we humans are also some kind of machine. It is only to be expected that, after we invented a more sophisticated machine, the computer, the thought should arise that the whole world could be a computer, simulating the world as we see it, including ourselves.

In all these hypotheses there may well be some or even a lot of truth, but we have to be careful not to limit our conclusions to the current limits of our ability to build something. It is also possible that the world has a more complex basis that we have yet to discover, layer by layer.

Nonetheless the hypothesis of physics being based on something that resembles a computer more than a set of mathematical equations is highly interesting and worth pursuing further.

In his original article he explains a consequence of the Fermi Paradox:

Humanity seems to have a bright future, i.e., a non-trivial chance of expanding to fill the universe with lasting life. But the fact that space near us seems dead now tells us that any given piece of dead matter faces an astronomically low chance of begating such a future. There thus exists a great filter between death and expanding lasting life, and humanity faces the ominous question: how far along this filter are we?

Combining standard stories of biologists, astronomers, physicists, and social scientists would lead us to expect a much smaller filter than we observe. Thus one of these stories must be wrong. To find out who is wrong, and to inform our choices, we should study and reconsider all these areas. For example, we should seek evidence of extraterrestrials, such as via signals, fossils, or astronomy. But contrary to common expectations, evidence of extraterrestrials is likely bad (though valuable) news. The easier it was for life to evolve to our stage, the bleaker our future chances probably are.

Several billion years ago, somewhere on planet Earth, a self-replicating molecule came into being. This was the beginning of life, possibly the hardest part. From then on, evolution made sure, through trial and error, that the self-replicating entities got better and better at replication, until quite complex mammals inhabited the planet.

However, even complex mammals have very little rational intelligence, with the possible exception of the large apes, elephants, and whales (dolphins). All others are exclusively controlled by instincts or drives. These steer behavioral patterns depending on circumstances.

Drives are created through evolution. Animals equipped with a successful instinct pattern succeed over those whose instincts are not as well matched to reality.

Some basic drives:

Lacking rational intelligence, all actions of more complex animals are controlled by an extensive system of instincts, with very few exceptions. At the bottom of the sometimes complex system of instincts are the basic drives, and more complex instincts build on them and control the actions of the animal through a web of reactions to various stimuli.

At some time around 4 million years ago a few animal species, namely whales, elephants and some apes, had developed large, capable brains, but not yet any sophisticated abstract, rational, manipulative thinking.

But then something remarkable happened. One particular species, a large ape, began to evolve towards an ever larger brain. In the evolutionarily short period of these 4 million years, the brains of this particular evolutionary branch grew from some 300 to 350 cm³ to about four times that size, the roughly 1,300 cm³ of contemporary humans.

Along the way, these apes and early humans suffered all kinds of disadvantages. Many other abilities were sacrificed. Modern humans no longer have much fur, and their sensory abilities can no longer match those of many animal species living in the wild. Difficult pregnancies and very difficult childbirth were the price paid for this large brain. Why?

The answer can only be that the advantage of the larger brain outweighed all its disadvantages. And the advantage is the ability to think rationally and also to communicate the thoughts through language.

Around 4 million years ago the animal that was in the most advantageous situation, a large ape called Australopithecus, began this new trend. This animal had a big, complex brain, good 3-dimensional vision and comprehension (due to moving in the heavily 3-dimensional labyrinth of tree branches), and hands that could grasp and manipulate things. Once brain complexity had reached a certain level, this animal could use its internal model of the surrounding world to plan actions and predict outcomes beyond the level of instinctive reaction.

But the large ape was lucky enough to have a second advantage over many other animals. He lived in social groups and hunted with his group, and therefore already had some of the same social abilities that similar socially hunting animals like hunting dogs, hyaenas, and lions have.

Therefore, closely following the development of ever better rational thinking, the pre-human and human brain also evolved the ability to use language to communicate the results of its thinking to others in its social group.

From that time onward every little improvement, every increase in brain size and performance, yielded a competitive advantage for this species over other species and also over competitors of the same species, and the race for higher intelligence began.

Eventually we humans evolved sufficient intelligence to conquer most areas of our planet, drive plants and animals out of our territories at will, and reshape our environment to our liking.

However, we are still to a large extent controlled by drives and instincts, some of which we call emotions. In stress situations emotions have a higher priority than rational decisions. We are controlled by rational reasoning only to the extent that strong emotions are not aroused.

Possible reasons why this combination of high priority emotions and low priority rationality has so far succeeded in evolution are:

The composition of human intelligence is heavily determined by biological and historical factors and by the characteristics of our planet.

While the word intelligence is used for all kinds of abilities in popular language, in the field of psychology and in this article a much narrower definition is used. A common definition used in psychology is this:

Intelligence is the ability to act purposefully in unknown situations.

A very much simplified definition is:

Intelligence is the ability to think logically.

Both of these definitions fail to mention the fairly obvious point that a higher intelligence can solve more complex problems and can also solve comparable problems faster.

The word intelligence is often used very loosely in casual speech and among non-psychologists. There are frequent attempts to water down the concept of intelligence, partly because people fear being measured and classified. In this paper the most stringent definition is used, similar to that used in psychometrics.

A new, more suitable definition of intelligence shall be introduced here:

Intelligence is the possession of a model of reality and the ability to use this model to conceive and plan actions and to predict their outcomes. The higher the complexity and precision of the model, the plans, and the predictions, and the less time needed, the higher is the intelligence.

[German translation: Intelligenz ist die Fähigkeit, ein Modell der Umwelt zu besitzen und mit Hilfe dieses Modells Handlungen zu planen und ihre Wirkung vorherzusagen. Je komplexer und wirklichkeitsgetreuer das Modell, die Planung und die Vorhersage und je kürzer die benötigte Zeit, desto höher ist die Intelligenz.]

This definition lends itself more easily to considerations of high artificial intelligence, because we may have independent measures of model complexity and processing power and can thus compare machine intelligences without always having to use tests. The classic definitions are more universal and more direct, but they have the problem that we cannot measure or even estimate intelligence without testing it. This definition was designed with the aim of estimating intelligence without subjecting it to a test, which may not always be possible. It can also be used to predict technical artificial intelligence before it actually exists.

A quantification of this definition has not been attempted, but the complexity of a model could be defined by counting its elements and applying some complexity measure to the system of interrelations between these elements.
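The text deliberately leaves the measure open. As one hypothetical choice, the Python sketch below treats a model as a graph, with elements as nodes and interrelations as undirected edges, and reports the element count, the relation count, and the cyclomatic number E - V + P (relations minus elements plus the number of connected groups), which counts independent loops of mutual influence. This measure is borrowed from graph theory and software metrics; the function name, the choice of measure and the toy model are illustrative assumptions, not the author's proposal.

```python
from typing import Hashable, Iterable, Tuple

def model_complexity(elements: Iterable[Hashable],
                     relations: Iterable[Tuple[Hashable, Hashable]]) -> dict:
    """Hypothetical complexity measure for a model of reality.

    Elements are graph nodes, interrelations are undirected edges, and
    every relation must connect two listed elements. The cyclomatic
    number E - V + P counts independent loops (P = connected components).
    """
    nodes = list(dict.fromkeys(elements))   # de-duplicate, keep order
    parent = {n: n for n in nodes}

    def find(n):
        # Union-find root lookup with path halving.
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    edges = list(relations)
    for a, b in edges:
        parent[find(a)] = find(b)           # merge the two components

    components = len({find(n) for n in nodes})
    return {
        "elements": len(nodes),
        "relations": len(edges),
        "cyclomatic": len(edges) - len(nodes) + components,
    }

# Toy model: hunter, prey and terrain influence each other in a loop,
# while the weather affects only the terrain.
print(model_complexity(
    ["hunter", "prey", "terrain", "weather"],
    [("hunter", "prey"), ("prey", "terrain"), ("terrain", "hunter"),
     ("weather", "terrain")],
))
```

The toy model has four elements, four relations and one independent loop. Richer models would add elements, relations and loops, and any serious measure would probably also weight how precisely each relation is known.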

The definition also points to two different dimensions of intelligence, because speed is not necessarily linearly related to the other measures. For example, an intelligent being cannot necessarily solve a problem with twice as many elements in twice the time. Instead, for every intelligent being there are problems that it cannot solve in any reasonable time, or cannot solve at all. This is also what we find when we look at human beings. Conversely, a low but fast intelligence is conceivable and actually existed in computers around the year 2000.
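One way to see why speed and problem size are separate dimensions is to assume, purely for illustration, a problem that can only be solved by exhaustive search over n independent yes/no choices. The Python sketch below (function names and speed figures are hypothetical) shows that doubling n squares the work instead of doubling it, and that a thinker a thousand times faster gains only about ten elements within the same time budget.

```python
import math

def brute_force_steps(n_elements: int) -> int:
    """Candidates an exhaustive search must examine when each of
    n_elements independent yes/no choices has to be tried."""
    return 2 ** n_elements

def solvable_size(ops_per_second: float, seconds: float) -> int:
    """Largest n whose 2**n candidates fit into the given time budget."""
    return int(math.log2(ops_per_second * seconds))

# Doubling the problem size from 10 to 20 elements squares the work.
print(brute_force_steps(10), brute_force_steps(20))

# One minute of search at a million vs. a billion candidates per second.
print(solvable_size(1e6, 60), solvable_size(1e9, 60))
```

Under this assumption, the faster searcher handles 35 instead of 25 elements: a thousandfold increase in speed buys only a modest increase in the complexity of problems that can be solved.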

The human brain is built such that typical stone-age problems can be solved reasonably quickly by most people, because evolution discriminates harshly against those who fail. The human brain achieves this by having a large number of brain cells (neurons) work in parallel. Evolution has not achieved higher speeds than about 200 switching processes per second on its biological basis, so parallel processing was its only recourse.

When we throw complex technical problems at the human brain, we can easily overwhelm it. A standard IQ test nicely illustrates the complexity limitations of the human brain. If the number of elements in typical IQ test problems is even moderately raised, the limits of human intelligence are exceeded very quickly.

We have devised IQ (intelligence quotient) tests for humans, which essentially measure a few areas of rational thinking, such as the ability to detect and use systems hidden in numbers (i.e. a certain mathematical prowess), the ability to recognize structures in symbolic systems, the ability to manipulate a system to achieve some aim (like three-dimensional rotation), and the ability to understand and select words precisely. Some IQ tests do not even test all of these areas, but we find that the results in the different fields are positively correlated, leading to the hypothesis of a general brain performance parameter, the g factor, introduced by Charles Spearman and studied extensively by Arthur R. Jensen.

To measure intelligence, the intelligence quotient (IQ) was invented. It was originally defined as the mental age of a child divided by its chronological age, multiplied by 100. For example, a 10-year-old child that produces test results like the average 10-year-old is said to have an IQ of 100. A 10-year-old that produces test results like the average 12-year-old is said to have an IQ of 120. It was found that the IQ is roughly Gauss-distributed (normally distributed), i.e. it follows a bell-shaped curve with most people in the middle and progressively fewer people far away from the middle of 100.
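The historical ratio IQ is a one-line formula. The Python sketch below simply reproduces the two examples from the text; the function name is chosen for illustration. It also hints at why the ratio breaks down for adults: mental age stops rising in adulthood while chronological age keeps increasing.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Historical ratio IQ: mental age / chronological age * 100."""
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    return 100 * mental_age / chronological_age

print(ratio_iq(10, 10))  # 100.0: performs like an average 10-year-old
print(ratio_iq(12, 10))  # 120.0: performs like an average 12-year-old
```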

To extend the measure to adults, we now use a different procedure. We determine a person's rank in the population and project it onto the exact Gauss curve, so that the IQ is, by definition, Gauss-distributed. The standard deviation is usually set to 15, so that about 2% of all people have an IQ of 131 or higher.
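The projection of a rank onto the Gauss curve can be sketched with Python's standard `statistics.NormalDist`: a percentile rank is mapped through the inverse distribution function of a normal distribution with mean 100 and standard deviation 15. The function names are hypothetical, and real tests derive ranks from norm tables of a standardization sample rather than from a single formula.

```python
from statistics import NormalDist

IQ_SCALE = NormalDist(mu=100, sigma=15)  # mean 100, standard deviation 15

def deviation_iq(percentile_rank: float) -> float:
    """Project a percentile rank (strictly between 0 and 1) onto the IQ scale."""
    return IQ_SCALE.inv_cdf(percentile_rank)

def share_at_or_above(iq: float) -> float:
    """Fraction of the population expected at or above the given IQ."""
    return 1.0 - IQ_SCALE.cdf(iq)

print(round(deviation_iq(0.5)))         # 100: the median person
print(round(deviation_iq(0.98)))        # 131: better than 98% of people
print(f"{share_at_or_above(131):.1%}")  # roughly 2%
```

The last line confirms the figure in the text: with a standard deviation of 15, slightly under 2% of the population lies at or above 131. Because the scale is built from ranks, equal IQ differences do not imply equal differences in thinking performance.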

German 10 DM bank note with Gauss curve in the center

German 10 DM bank note, Gauss curve detail

The curve, however, gives no indication of actual brain performance. It is still only a semi-arbitrary transformation of a rank. An IQ test can only find out whether person A is more or less intelligent than person B but says nothing further about the actual thinking performance.

Intelligence varies widely among individuals. Some humans are highly intelligent and can solve problems and conceive ideas that other humans cannot. At the other end of the scale, some humans are unable to fend for themselves due to lack of intelligence and have to live in closed institutions. Most humans are somewhere in between. A person with an IQ of 100 may well be able to use a computer, but not to build one. Building one requires higher intelligence and more knowledge than any single person possesses.

What we also find is that actual problem solving performance varies even more widely than the IQ figure appears to indicate. A person with an IQ of 100 can fairly easily solve problems that somebody with an IQ of 70 finds impossible to solve, no matter how much time he is given. Somebody with an IQ of 130 can, in turn, solve problems quickly that are absolutely too difficult for somebody with an IQ of 100. It seems that intelligence, or problem solving performance, is very unevenly distributed among humans, much less evenly than, say, body height.

For simplicity we will use the IQ here as a placeholder for brain performance, although the IQ measures only a certain fraction of total brain performance in humans. It does not measure other areas like musicality, emotional ability, creativity, dexterity, and many more.

Self-awareness is the ability of intelligent beings to possess a mental model of their social environment that includes themselves. It was formerly called self-consciousness, but "self-conscious" has since changed its meaning, at least in American English, to shy, introverted, or ill at ease. In other words, self-aware beings can mentally rise above themselves, look down on themselves, and contemplate their surroundings, particularly their social group, including themselves. A self-aware being understands that he is nothing special, merely a being that is the same as another being of the same kind, of the same species.

Most animals don't have this ability.

A rough and simple test for self-awareness is the mirror test. A color blot is secretly placed on the head of an ape or elephant, such that the animal cannot see it directly. Then a mirror is presented. A self-aware being understands that the image in the mirror is itself. If it has the means to do so (hand, foreleg, trunk), it reaches for the blot to find out what it is.

Most animals are unable to do this. They simply don't understand that they themselves are like others of their species and that the image in the mirror is themselves.

Another, similar, if cruel, test is to shoot an animal in a group or herd from a long distance. If you do this with an antelope, the neighboring animals will briefly become alert, then they may continue grazing, totally oblivious to the fact that their neighbor has just been killed. They simply do not understand the concept of death at all, and they also don't care whether their neighbor is sleeping or never stands up again. There are some exceptions with mothers caring for their young and species living in closely-knit family groups, but there is still an obvious behavioral difference between that and understanding death. Understanding that you yourself could die in the same way requires self-awareness.

Some social animals may show some care or irritation if a member of their social group does not move or is missing, but only a few species, like elephants or apes, will show full understanding that the other one has been killed. Their behavior is totally different from that of an antelope in the same situation.

A further ability is the possession of a reasonably precise model of one's own body. This model enables us, for example, to discover a sucking mosquito and kill it by hitting it, or to point at a particular spot on our body and say that it hurts here. This is not trivial, because the location of the pain is felt internally but has to be translated into an arm movement that reaches the same spot. The only way to achieve this for any non-trivially-shaped and articulated body is to have a sufficiently complex mental model of the body. Many complex animals, including all self-aware species, appear to have this internal model.

We can actually detect imprecisions of this model and measure them, for example by blindfolding somebody, poking him with a pointed object without leaving a visible trace, then taking off the blindfold and asking the person to point at the location of the sting without touching the skin. The distance between the actual sting point and the location the person points at is the sum of a few errors, including the model inaccuracy.

Along with it goes the ability to see or feel (in the sense of touching and feeling) oneself and the others. This makes it possible to find out and comprehend that "the others are like me" in the first place. This ability, along with being a social animal, seems to be a primary prerequisite for self-awareness. On our planet all known self-aware species can both see and feel.

The other prerequisite is sufficient intelligence. A third prerequisite, at least on our planet, seems to be living in a social group. Apparently, before you can recognize that you are like John, you first have to understand that John is like Jack, and that both are similar but individually different beings. This can only happen if you live in a group with John and Jack and spend a lot of time with them. Otherwise the necessity to understand these social details would not arise in the first place, and the ability would not evolve.

A problem with this definition of self-awareness is that humans, without an analysis like the one here, like to see self-awareness as a mysterious, divine ability and thus tend to fall back into totally unfounded anthropocentrism. This often makes discussing self-awareness difficult. Humans simply don't like to hear that there isn't more to it. They want to be something special, something divine, and deny all other beings, particularly machines, this ability.

Around the year 2000 artificial intelligence still differs a lot from human intelligence. Machines can do some particular tasks extremely well, like finding a large prime number or searching a large database. Yet they are still unable to perform typically human tasks, like household chores, or steering an automobile using visual cues only.

Computers can usually perform tasks that can be defined very precisely and that do not require a large knowledge base. Once they have the program, they can repeat the task endlessly with the same accuracy, without tiring or losing interest. They can perform relatively simple tasks at extremely high speeds and with extremely high precision.

But when it comes to less precisely defined tasks like those usually faced by humans in their everyday life, computers aren't very good at them yet. This is partly because nobody has bothered to write clear definitions for the vast number of more or less trivial tasks performed by humans every day, and partly because we humans possess evolved structures in our brains that already contain the "programs" to deal with those tasks, along with all the needed background knowledge. These brain structures have evolved and been honed over millions of years by trial and error, and since they are readily available and don't even require high intelligence, our trucks are still manned by a human driver and our windows are still wiped by a human, rather than by a computer.

How could machines acquire the abilities that we humans already have? Several ways are conceivable.

We have to consider, though, that many of the tasks currently performed by humans may not be of much importance for machines. A mining robot has no need to pour a cup of coffee. It will only have to excel at the particular tasks it was built for. And if machines find it difficult to steer a car from visual cues, then some other technical means that comes easier to the machine could be used. Examples are embedded signal wires, or artificial surface reference points aided by precise satellite navigation.

The widely varying level of abilities like intelligence in the population is a fact often overlooked by futurologists who try to predict the future of intelligent machines. They are often concerned with the point in time when those machines surpass human intelligence, but forget to ask whose intelligence they mean. Assuming that artificial intelligence can be raised by building new, better machines, it is conceivable that we will one day have robots with an IQ of 60. It will be more difficult and take some more time to reach an IQ of 100 (always measured on the human scale), and it will be even more difficult to reach or surpass the intelligence of the most intelligent humans on earth.

Lots of robots with an IQ of 60 or 100 could be a tremendous boon to our well-being, as they could perform menial tasks that humans don't like to do. They would still be unable to design better robots on their own, because that requires a much higher IQ, although they could be of some assistance. It is therefore possible that we will experience a period in which humans coexist with somewhat human-like robots and make use of them, without any direct threat that these robots will increase their own intelligence.

On the other hand, there are very high incentives for humans to increase machine intelligence, as more intelligent machines could tremendously boost our material base and increase our well-being by working for us. Autonomous robots mining the bottom of oceans, spacefaring, mining the moon or other planets, and particularly military applications will greatly benefit from higher intelligence, so it is likely that humans will keep trying intensely to raise the level of artificial intelligence.

Computers in the year 2000, and even most existing robots at the time, are usually immobile unless they are built into a vehicle or carried around. Industrial robots can move their arms, but they cannot walk around. This, however, severely limits their usefulness.

There is one significant exception: military robots, like cruise missiles. Here the ability to move is so essential to their purpose that their designers had to look for ways to mobilize them.

In most applications it would be a boon if a robot could move on its own, provided that it does so safely. For many future applications, like exploration, mining, building, or cleaning, mobility is indispensable. Hence many intelligent machines will become mobile and will become full-fledged robots.

While human tasks like walking or running are still daunting for machines, it will not take long until this hurdle is overcome, as the problems are always the same and of limited complexity, so standardized solutions can be developed. It seems that the problem of motion is easier to solve than the problem of high intelligence, because a technical solution, even if its development is very expensive, can be copied and reused for a whole range of similarly moving robots.

In fact, the main problem is not the movement itself (witness how easy it is to build a remote-controlled toy car). The main problem is the decision where, and where not, to move, i.e. navigation. However, we already have some solutions that work quite differently from the original human ways. Examples are ultrasound and radar for distance measurement and collision avoidance, bar codes to recognize things or locations, and, outside buildings, satellite navigation, i.e. GPS (Global Positioning System).
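Ultrasound and radar ranging both work on the echo principle. A pulse is sent out, and the time until its reflection returns is measured. Because the pulse travels to the obstacle and back, the distance is half of speed times time. The following is a minimal sketch. It assumes a speed of sound of about 343 m/s, a typical value for dry air at about 20 °C that varies with temperature. The stopping rule `must_stop` and its 0.5 m safety margin are hypothetical. Radar uses the same formula with the speed of light in place of the speed of sound.

```python
# Assumed speed of sound in dry air at about 20 °C, in m/s.
SPEED_OF_SOUND_M_S = 343.0

def echo_distance(round_trip_s: float, wave_speed: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to an obstacle from the round-trip time of an echo pulse.

    The pulse travels out and back, so the one-way distance is
    half of wave_speed * round_trip_s.
    """
    return wave_speed * round_trip_s / 2.0

def must_stop(round_trip_s: float, safety_margin_m: float = 0.5) -> bool:
    """Hypothetical collision-avoidance rule: stop if the obstacle is too close."""
    return echo_distance(round_trip_s) < safety_margin_m

print(echo_distance(0.01))  # about 1.7 m
print(must_stop(0.002))     # True: the obstacle is only about 0.34 m away
```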

There are two conceivable ways to achieve hyperintelligence. The first, the genetic way, is to apply intelligence to our own genes and improve them. The second, the technical way, is to start with the computers of the year 2000 and improve these. Which way is more likely to yield results quickly?

We live in a rich universe. Even the small subset of physical possibilities we have discovered so far contains mind-boggling possibilities, far beyond what nature could utilize through trial and error. This is why we etch the plans for our computers into silicon chips and not into gray cells. This is why we carry mobile phones, rather than change our genes to grow antennae on our heads. By applying our current intelligence and the scientific method to the world, we could easily surpass most parameters of a human or an animal. The few exceptions are that living beings can survive on their own, that they can replicate themselves, and that humans and some other species are intelligent. Apart from these things, which are apparently hard to achieve with machines, we can use machines to harness very large amounts of energy and to process information. We can combine these two to achieve superhuman performance in various fields, like moving and communicating very fast, building large structures, etc.

In short, we could achieve with machines many things that nature could not achieve on the evolutionary, biological path. Evolution is limited to working in small, random, and therefore unintelligent steps. Evolution could not manage to dissociate living beings from certain carbon-based materials like proteins, because something like a silicon chip could not be reached on any evolutionary path, at least not on earth.

Another possibility should not be forgotten: hybrids. The possibility will exist to have humans with high-tech implants, genetically modified humans with high-tech implants, or robots that contain a human or just his brain, with or without genetic modifications. Hybrids may not be a long-term solution, but they may be a useful intermediate step.

Yet another kind of pseudo-hybrid would be a machine that contains not a human or his brain, but a functioning copy of a human brain on a technical substrate, an uploaded brain. If the uploaded brain copy acts alone, then that would be a human in a machine body. If, however, the uploaded brain copy is supplemented with artificial intelligence, then this would be another form of hybrid.

When we look at contemporary computers, we do not notice instincts or emotions in them. Computers around the year 2000 seem to be purely rational. On their own, they do nothing. Only when we give them instructions do they "come to life", and then only to obey the commands they were given. After they have performed the task they were given, they go into an idle loop, something akin to dozing in animals or humans.

Some computers perform self-tests or do some internal housekeeping while idling, which reminds us of a cat licking its fur when idle, but such activities have a very low priority and cease as soon as the computer is given a new problem to solve.

If we look at the computer as a black box and judge it only for what it does, this could be described as the computer having the instinct of a perfect slave, plus perhaps some tiny self-preservation instinct.

It is important to realize that instincts and intelligence are orthogonal to each other. In principle they don't depend on each other, unless they are designed to. It is theoretically possible to build instinctless robots that are nonetheless highly intelligent, or robots that follow an instinct yet have little or no intelligence. The theoretical difference is this: an instinct is a preprogrammed pattern of reactions to stimuli. We speak of intelligence when a robot or living being makes use of a complex model of reality that also includes itself, i.e. self-awareness.

Assuming that we will one day be able to create truly intelligent computers, is a computer conceivable that has superhuman intelligence, yet no instinct other than to be a perfect slave? Yes, because all we would need to do is to raise the intelligence of our computers while not instilling any undesirable instincts into them. This seems possible in theory, but is it possible in practice in the long run?

What instinct or drive would machines need to become a life form? Does hyperintelligent artificial life need any instincts at all? Yes, because otherwise it would be inactive and would probably erode away over time.

One of the major characteristics of life is its expansion. When we find a life form in some place, then we can assume that it must have had at least one basic drive: expansion. Otherwise it would not have expanded into that place (unless it evolved right there and never moved).

In other words, if we imagine two life forms, one of which, A, is expansive, the other, B, isn't, then we can expect to meet A in various places, while we cannot expect B anywhere except perhaps still at its place of inception. Given that the evolution of life from dead matter is rare and happens only in suitable spots, we can safely assume that all life we will ever meet is expansive.

If the crucial characteristic of all life is expansion, does life necessarily need any other aims to fulfill its drive to expand? This depends on how we define life. If we call everything life that spreads out and changes dead matter into something that spreads, then all other aims or drives or instincts can be derived from that and seen as serving only the purpose of expansion. For the purpose of this paper we will use this wide definition of the word "life". If this doesn't coincide with your use of this word, you may substitute "life in the widest sense" or "expansive agents" for the word "life".

A highly intelligent being may not need any instincts at all to be called a life form, except the will to expand, because it may be able to make all other decisions rationally. For example, since harm to one such being would hamper its expansion, the being would probably quickly come to the conclusion that defending itself from such harm promotes expansion. Therefore, if rational thinking could be done quickly enough, the being does not need a separate self-defense instinct.

To give an example, when I notice an object flying towards me, I will instinctively make an evasive movement like ducking down or protecting my face with my hands. The reason for the existence of this instinct is that a purely rational approach would be too slow. A highly intelligent being could, however, measure and calculate the trajectory, determine the place and time of impact, then calculate the optimal evasive action. This would be much better, but the human brain is too slow for it. A future hyperintelligent robot's "brain", however, may well be able to go the rational route with excellent success.
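The "rational route" described here can be illustrated with a deliberately simplified sketch. It assumes an ideal ballistic path with no air drag, level ground, an object flying straight toward the observer, and a head occupying a fixed height band. The names `Projectile`, `predict_impact`, and `choose_action`, and the default head heights, are all hypothetical.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration in m/s^2

@dataclass
class Projectile:
    """Hypothetical sensor reading: state of an incoming object at t = 0."""
    distance: float  # horizontal distance to the observer, m
    height: float    # current height above the ground, m
    vx: float        # horizontal speed toward the observer, m/s (must be > 0)
    vy: float        # vertical speed, m/s (positive = upward)

def predict_impact(p: Projectile) -> tuple[float, float]:
    """Time until the object reaches the observer, and its height at that moment.

    Without drag, horizontal motion is uniform and vertical motion is
    uniformly accelerated by gravity.
    """
    t = p.distance / p.vx
    y = p.height + p.vy * t - 0.5 * G * t * t
    return t, y

def choose_action(p: Projectile, head_bottom: float = 1.5, head_top: float = 1.8) -> str:
    """Hypothetical decision rule for an observer whose head spans the given heights."""
    t, y = predict_impact(p)
    if y <= 0:
        return "ignore"  # the object hits the ground before it arrives
    if head_bottom <= y <= head_top:
        return f"duck within {t:.2f} s"
    return "stay"

print(choose_action(Projectile(distance=10, height=1.7, vx=10, vy=4.905)))
# duck within 1.00 s
```

A real system would also have to estimate the object's state from noisy measurements and account for drag. The principle, however, is the one described in the text: measure, predict, then choose an action, instead of reacting by reflex.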

This is not to say that hyperintelligent beings will not have any complex systems of social, moral, ethical, or legal rules. In fact it appears likely that they will, just like we humans developed such systems to our great advantage. However, they may not have to perpetuate our archaic rules that serve only to contain and channel our stone age behavior in a modern civilization.

The following graph shows possible relations between instinct and intelligence in three different life forms. Hyperintelligent artificial life may only need some very basic drive, like the will to expand, and may use its intelligence for all other activities.

The picture below shows, very much simplified and not to scale, three phases of intelligence growth. The first phase is the evolution of animals, during which intelligence remains very low. In fact, we don't speak of intelligence in most animal species at all. All their achievements are produced by instincts.

Growth of animal intelligence, human intelligence, and artificial hyperintelligence

(not to scale)

Starting at the first sharp bend in the curve, the second phase, which has lasted about 4 million years, is the evolution of human intelligence.

It also saw strong intelligence growth in a few other mammals like elephants. They were a bit less lucky than ourselves with their speed of intelligence growth, although elephant brains, weighing around 5 kg, are considerably bigger than humans'. It appears, though, that elephants evolved their excellent memory even more than their intelligence and so fell behind humans. Perhaps this is because they have always lacked three-dimensional sight and the dexterity of hands, which their trunks cannot quite match. Nonetheless they are living proof that nature does evolve intelligence wherever possible, not just in humans.

This second phase begins when the brain of one animal can hold a sufficiently complex model of the surrounding reality to benefit from preconception and planning. Among other abilities, this leads to tool making and technology. As soon as Mother Nature discovers that raising intelligence yields more success than improving other abilities, the race for higher intelligence begins. Brain size increases unusually quickly, at the cost of losing other abilities and even at the cost of losing lives in childbirth.

Indeed we humans have lost the fur, the ability to climb as well as our distant forebears, the big teeth, the strength, the sharp senses, etc. (Compare yourself to a chimpanzee who weighs 70 kg, yet has not the slightest difficulty doing one-armed pull-ups all day.) At the same time, along with a rise of intelligence to the current levels, the cranial volume has tripled, a most unusual speed for evolution. The few things we appear to have gained besides intelligence are manual dexterity, along with walking, running, and some more body height.

The driving force is still evolution through purely Darwinian selection. As astounding as the result may be, it is still held back by the fact that the evolved intelligence cannot be applied to itself. In other words, the master plan, in our biological case the genes, is improved only extremely slowly through trial and error.

Beginning at the second sharp bend in the curve, the third phase begins when, at a certain level of intelligence and civilization, it becomes possible to let intelligence work on itself, either by improving its own plan (the genetic way) or by creating an entirely new plan (the technical way). The latter new plan could be the circuit diagram of a computer or lines of program code. When this happens, the speed of intelligence growth will increase enormously, such that this newly increased intelligence surpasses human intelligence levels very shortly thereafter. We are now very close to this point.

How can we imagine artificial hyperintelligence? Will it appear more like a human genius or more like a speaking computer?

We have already achieved superhuman artificial intelligence, but only in very limited areas like playing chess. Also, computers easily outperform humans in certain tasks like calculations or symbolic manipulation. Whenever a task is simple and repetitive enough that we can write an efficient computer program for it, the computer will outperform us.

Computers around the year 2000 did not yet have the same performance as the human brain. Ray Kurzweil bases his estimate on the number of neurons and synapses in the human brain (roughly 100 billion neurons, with roughly 1,000 synapses per neuron) and on their speed (200 Hz). Assuming that Moore's law will still hold, he estimates that the first supercomputer will reach human brain performance levels around 2010. Some 10 years later, small, personal, low-price computers should reach the same level. Around 2050 a single supercomputer will reach the performance of all human brains on earth.
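The arithmetic behind such an estimate can be made explicit. Multiplying the three figures from the text gives about 2 × 10^16 synaptic events per second. The projection function below assumes that performance doubles at a fixed interval, which is the usual reading of Moore's law. The 1.5-year doubling period used in the example is an assumed, commonly quoted figure and is not taken from the text. The function name `years_to_reach` is hypothetical.

```python
import math

NEURONS = 100e9              # roughly 100 billion neurons
SYNAPSES_PER_NEURON = 1_000  # roughly 1,000 synapses per neuron
FIRING_RATE_HZ = 200         # roughly 200 Hz

# Rough brain "performance": synaptic events per second.
brain_ops_per_s = NEURONS * SYNAPSES_PER_NEURON * FIRING_RATE_HZ
print(f"{brain_ops_per_s:.0e}")  # 2e+16

def years_to_reach(current: float, target: float, doubling_years: float) -> float:
    """Years until `current` grows to `target` if it doubles every `doubling_years`."""
    return doubling_years * math.log2(target / current)

# A hypothetical machine 1,000 times too slow, with an assumed 1.5-year doubling period:
print(round(years_to_reach(brain_ops_per_s / 1000, brain_ops_per_s, 1.5), 1))  # 14.9
```

The logarithm is what makes such forecasts fairly insensitive to the exact size of the gap. Under these assumptions, a thousandfold shortfall costs only about ten doublings.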

Will Moore's Law continue to be valid? We cannot know for sure, but whomever you ask in the semiconductor industry, the answer always seems to be, yes, as far as we can see, it will. For the next 10 years the validity of Moore's Law is almost guaranteed, because research results are already there, and all that's needed is the industrial application, which is not in doubt. It would be very astounding if progress in this field ran into a wall and came to a sudden halt. No researcher seriously considers this possibility, because even if one particular technical path turned out to be a dead end, there would still be many others.

But does high data processing performance always lead to intelligence? Not necessarily, but we have good reason to assume that very high performance will help us create genuine intelligence in two different ways.

First, raw performance translates into intelligence in "brute force" algorithms (simply trying and calculating a large number of possibilities). In certain areas, for example, chess playing, brute force can easily be more successful than the human way of thinking. Given enough performance, many more areas could be covered by this relatively simple method.
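Chess is far too large for a short example, but the brute-force principle can be shown on a toy game. The following is a minimal sketch using tic-tac-toe as a stand-in for chess. It exhaustively tries every continuation from a position (minimax search) and needs no human-style insight at all. The `lru_cache` memoization is an added optimization. It reuses results for positions that recur, but the search still covers every reachable position. The board encoding and function names are hypothetical.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board, cells numbered 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]

def winner(board: str) -> str:
    """Return 'X' or 'O' if that player has three in a row, else ''."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return ""

@lru_cache(maxsize=None)
def value(board: str, player: str) -> int:
    """Game value with perfect play: +1 if X wins, -1 if O wins, 0 for a draw.

    `board` is a 9-character string of 'X', 'O' and '.'; `player` moves next.
    """
    w = winner(board)
    if w:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0  # board full, nobody won
    other = "O" if player == "X" else "X"
    results = [value(board[:i] + player + board[i + 1:], other)
               for i, cell in enumerate(board) if cell == "."]
    # X picks the best outcome for X, O the best outcome for O.
    return max(results) if player == "X" else min(results)

print(value("." * 9, "X"))  # 0: tic-tac-toe is a draw with perfect play
```

The same idea applied to chess is hopeless without very high raw speed plus pruning and evaluation heuristics. This is exactly why growing performance matters for the method.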

Second, with rising performance computers will assist their own programming, and later, as they gain the ability to understand written human language, increasingly program themselves. As soon as we accept that in the computer and software industry the structural and programming complexity of computers will grow along with raw performance, it follows that intelligence will rise and eventually reach and exceed human levels.

As the intelligence of even small, cheap computers grows, we will see more and more robots. Initially they will do relatively simple tasks like mowing the lawn or vacuum-cleaning a room. Another such task is steering a military air-drop parachute precisely to its predetermined destination, with the help of a precision navigation system like GPS, by pulling on two ropes like a paragliding human. All of these already existed by the year 2004.

According to The Economist (2004-10-30, p. 114), worldwide investment in industrial robots grew by 19% in 2003. Japan leads the world; it installed 31,600 new robots, bringing its total to 349,000. Sales of robot vacuum cleaners and lawn-mowers are thought to be running at nearly 1 million a year.

As the intelligence increases, we will see more robots, including smaller ones, performing increasingly sophisticated tasks in industry and in the home, in agriculture and in the military. This is easiest when the robot simply has to fulfill its task no matter what, doesn't have to be very careful with anything, and the task allows for a certain failure rate. Many of these are military tasks, like small vehicles that automatically fly or drive to a predetermined destination and gather information or deliver a payload.

A problem is that such robots could do illegal things, particularly transporting illegal goods. Picture swarms of small robotic planes carrying illegal drugs from one country to another, possibly over very long distances and perhaps flying very low. Each would land in a different place known to nobody but the few people who program the plane and await it at its destination. It may become difficult for law enforcement agencies to keep tabs on such swarms. Other illegal activities performed by robots are easily imaginable.

But the socially explosive question is simply: When will robots be cheaper and more intelligent than any significant slice of the human population?

Some time before the computer Deep Blue beat World Chess Champion Garry Kasparov in a controlled, fair match, many people said that chess requires intelligence and can therefore never be played well by a computer. In fact, some people said that playing chess well is the ultimate proof of intelligence.

Now that a computer has beaten the world champion, people have changed tack and state that the machine isn't intelligent at all and that chess doesn't require true intelligence. We will experience this redefining ever more often as computers master one previously human task after another. Each instance will be an indication that computers are making progress.

One argument we will hear is that computers master certain tasks (including chess) not through intelligence, but through the application of "brute force" algorithms, and that this does not count as intelligence. This means that computers can use simpler algorithms than humans, because they are so much faster. To give a simple example, to find the sum of all numbers from 1 to 1000, a human will have to resort to algebraic methods, while a computer can simply add them and still be finished in virtually no time.
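The example can be made concrete. The brute-force version simply adds all 1000 numbers. The algebraic version uses the closed formula n(n + 1)/2, which pairs 1 with 1000, 2 with 999, and so on. Both give the same answer. The function names are illustrative.

```python
def sum_brute_force(n: int) -> int:
    """Add 1 + 2 + ... + n one number at a time, as a computer can afford to."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total

def sum_gauss(n: int) -> int:
    """Closed formula n(n + 1) / 2, the shortcut a human would use."""
    return n * (n + 1) // 2

print(sum_brute_force(1000), sum_gauss(1000))  # 500500 500500
```

For n = 1000 the loop runs a thousand times, which takes a modern computer a negligible amount of time. The result is indistinguishable from that of the "intelligent" shortcut.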

The problem with this line of argument is that ultimately it is the result that counts. As the speed and performance of computers keep rising over the next decade or two, they will be able to achieve more and more intelligent results without having to apply human-like thinking structures.

This effect alone may not achieve hyperintelligence quickly, but it makes the task considerably easier.

Another effect we will observe as machine intelligence rises is that humans are taken out of the loop in technological developments. Already now large parts of the design process for new computers are performed by existing computers, partly because humans could not possibly do the tedious tasks of designing multi-layer printed circuit boards or ever more complex processor chips.

A problem with this is that humans not only get rid of the tedious work. The other side of the coin is that we also lose opportunities to make decisions.

The other possible course of development is the genetic path. Rather than designing intelligent machines, we could instead opt for increasing intelligence in human brains by altering our own genes.

Is this the likely path to the future? It has the obvious advantage that we can build on what we already have (or are), but it is fraught with a number of difficulties.

Also, we live in a world whose physical characteristics offer an incredible richness of useful properties. As science progresses, we find more and more opportunities to perform tasks way beyond all biological possibilities.

To give just one illuminating example, it is now technically possible to transport the entire current data flow of an industrialized country like Germany in the year 2001, some 100 Terabits per second, through a single glass fiber strand.

Viewed in this light it appears extremely unlikely that the biological path, the only one nature could find by trial and error, should be the most promising for all times.

Because of the superiority of the technical alternatives the biological course of action seems less likely even for the near future. It could well happen in the very near future to some degree though, and it is conceivable that some brain structures and contents could be copied and uploaded into a machine.

Nature's only way to procreate is for some offspring to grow in or on one individual, then at some time split away and become autonomous, more or less like a copy of the parent or parents. Nature couldn't invent other ways, but we can.

There is no need for each robot to have the complex ability to create another robot. Robots can be built in factories, employing special machines that are better suited to the task.

Our current advantage is that we and our robots are no longer limited to the forces of evolution, trial and error, to improve the design of future intelligent beings. We can now apply all existing intelligence to the design and construction of better systems and are not bound by the rules of biology or evolution. We can explore models that nature could not possibly invent. We can take any shortcut and construct entirely new designs that have never existed before.

Most human knowledge is stored in writing. Libraries full of books contain what we have found out about our world and ourselves so far. These books are written in human languages and some variations like mathematical, physical, chemical, or other special notations. Some of them contain illustrations.

If computers could read all this, they could acquire all the accumulated human knowledge in a short time. If they could understand its meaning, they could make use of it and put themselves into a situation that is superior to that of the best human scientists, because they are not burdened with the limitations of the human brain like slow processing speed and limited memory.

Therefore the next major step will be that computers acquire the ability to understand human language. The degree of understanding will vary. Initially, computers will have the vast amount of accumulated knowledge in our libraries at their disposal, but their ability to draw conclusions will be limited.

However, as this ability grows, a virtuous circle ensues. Understanding one part will help to understand the next, so it will take only a few years from the first beginnings until we see computer systems of which one could say that they know everything humans have ever known (and written).

Initially this will be paired with low, limited intelligence. We will see computer systems that know a great many facts but cannot draw complex conclusions from their vast knowledge. They will appear like idiots savants, citing scientists and artists but still acting clumsily, loosely comparable to a human with an IQ of 60 combined with an enormous photographic memory.

However, as processing power and the accompanying intelligence keep increasing, the huge knowledge will make these systems extremely useful and powerful. We will observe (or, rather, see to it) that these systems rummage through the texts of all the libraries in the world, attempting to make sense of what they read. They will have to learn to distinguish between different contexts, fact and fiction, science and pseudo-science, precise and imprecise, true and false. They will have to grasp rules and exceptions, areas of validity, the meaning of context. In short, they will have to learn all the intricacies of natural human language.

The result, however, will be nothing short of a revolution. Over a phase of just a few years at least two human professions will rather suddenly disappear as a result:

In a way, a coder is a translator, except that the target language is not a human language but a programming language specifically designed to be easily understood by primitive computers.

We will still need software designers for some time, as initially the computers will lack the intelligence and creativity to set directions and conceive of new applications.

We may also see the advent of entirely new languages that dispense with the biological and historical ballast we have to tote around in our biological brains and instead achieve the much higher degrees of precision needed to tackle increasingly complex problems. They will be the real next-generation computer languages, used by computers as their own means of storing information, with human languages serving only as a "user interface" for humans.

At some point, roughly around 2010, the first computers will be able to read and understand written language well enough to process most literature, especially technical and scientific literature, and to create huge knowledge databases. This will suddenly raise the knowledge of computers from almost nothing to the accumulated knowledge of mankind, because many books, especially those in the fields of science and technology, are already available in computer-readable form and stored in electronic libraries.

Initially, understanding language means nothing more than being able to parse the sentences, recognize the language structures, and store this information in a form that can be evaluated and queried. With rising machine intelligence, more and more of this knowledge will be fully understood, in the sense that the full meaning of texts can be derived, reformulated, and utilized.

This, in turn, will lead to a revolution in computer programming. For years there has been talk about the software crisis, which is another name for the lack of high-quality programming capacity. Around the year 2000 we are in a situation where even programs that perform relatively simple tasks, such as word processors that handle formatted text, cannot be written to a high standard of quality. For example, the entire functionality of a program like Microsoft Word is relatively easy for a programmer to understand, but when put under load, the program simply fails. Functions work one by one but fail when combined, or the program crashes when facing a moderately larger amount of data or a document of moderately higher complexity. When confronted with a truly large amount of data or a high degree of complexity, though still well within the capabilities of year-2000 computers, such as a document with a thousand pictures, some nontrivial structure, and a gigabyte of data altogether, the program almost certainly fails.

Large programming projects often fail. We are currently unable to write programs beyond a certain complexity. Core programming teams that write the main, unsplittable functionality of large programs often consist of no more than five people, because adding more people increases the communication overhead so much that it eats up everything gained by enlarging the team.

The reason is that programmers around the year 2000 still have to deal with the finest details, with every bit and byte the program processes. Programming languages like C++ are little more than slightly better assemblers and provide only a thin layer that shields the programmer from the gory details of machine language. We do not have any truly high-level programming languages in the year 2000. Instead, programmers face ever more unwieldy libraries of accumulated code that they can try to learn and use, but this kind of code reuse does not take us very far either, for various reasons.

As soon as natural language can be understood by computers, and tools are devised to make this knowledge usable, programmers will no longer have to use arcane programming languages, and in particular they will no longer have to define problems down to the last detail. Instead it will become possible to define a problem coarsely and let the computer fill in the blanks with knowledge gleaned from the databases that will have been created from scientific, technical, and general literature. Every step in this direction will immediately increase programmer productivity, one of the scarcest resources around the year 2000. Many people who lack the talent or patience to learn a programming language can then design computer programs by describing the problem in natural language and engaging in dialogs with the computer, in which the machine fills in the details and clarifies ambiguities in cooperation with the human designer.

For this to work, computers will still not need a general IQ above 100 on the human scale, but comparing computers to humans may become increasingly difficult, as computers could have extremely uneven, narrow strengths and weaknesses compared to humans. Assigning one general IQ to a computer may be misleading or entirely meaningless.

After the first step, acquiring human language, the next most important task, perhaps the only remaining one, is to raise intelligence.

Currently the intelligence of computers differs somewhat from that of humans. Unlike humans, computers can perform any task they have been taught extremely well and extremely quickly. Conversely, they are unable to perform even a simple task they have not learned. One could say that their intelligence is low but quick, and that they are extremely good and reliable learners and repeaters.

In the future, ways will be found to enable computers to do any task somehow, even if they cannot do it very well and even if they have not thoroughly learned it. We can expect the advent of computer systems that show surprisingly high abilities in certain special fields but are still unable to do well things that come easily to humans.

During this phase it will be extremely beneficial to combine the different abilities of computers and humans. In fact, we already observe this around the year 2000, with extreme specialization on the part of computers. In the future, computers will increasingly understand everything we write to some extent, but will still have areas of expertise, within which we will gladly leave tasks to them in the sure knowledge that they can perform them better than we can, and other areas they cannot cover yet, in which humans must at least provide guidance.

Early examples will be:

We may see computers that can process large amounts of knowledge and successfully test, prove, or disprove hypotheses, or estimate their probability of being true, even when the hypotheses are too complex for a human or a team of humans to comprehend.

Later we will see robots that are quite human-like in many aspects, but different in others. They will be human-like in those aspects that are needed for human-like tasks, but they will be different from humans when better non-biological and non-evolutionary ways are available. A simple mechanical example is that they could move on wheels, rather than on legs (although legs have their advantages too, so some robots will need them).

Another example is that robots could use different means to the same end. For example, while we humans learn by trial and error how to pour a cup of coffee and never achieve very high reliability, a computer could run a million simulations in a fraction of a second and thus solve the problem by brute force, then pour the cup of coffee much faster and more safely than any human could.

We will be able to control robots by talking to them. When they don't understand us perfectly, they will ask for clarification, just as a human who hasn't understood a sentence would. Initially some of the required clarifications will amuse us, because we will keep discovering how imprecise and ambiguous natural language can be. As machine intelligence rises, however, robots will increasingly be able to anticipate what we mean and make their own sensible decisions.

"James, please bring me a cup of coffee."

"Big or small, sir?"

"Hmm, OK, bring me a mug."

"Please confirm that I shall prepare and bring a mug containing 0.4 litres of hot coffee with the usual concentrations of milk and sugar."

"Ah, try a bit more sugar than yesterday."

"Raising sugar concentration by 20%."

"OK."

"Thank you, sir. I will begin now."

As intelligence rises to human levels in more areas of thinking, we will observe that machines can be devised that master many everyday tasks like the home servant work above or driving a car. Computers or robots will be far superior to humans in a growing range of tasks, yet still far inferior in others, because of the different structure of computer intelligence.

Computers or robots will gradually occupy profession after profession and take over all the jobs they can do better than humans, i.e. all those that do not require abilities that computers have not yet mastered. In other jobs, like science and technology, we will see teams of humans assisted by a large variety of computers and, increasingly, robots.

Another possible path of development is that human brain content or structure is copied into machines. It is conceivable that we design machines that behave like humans or "upgrade" or "extend" humans with machine parts, especially with non-biological intelligence.

As all these developments happen and new machines fill jobs formerly occupied by humans, we will observe the massive structural unemployment that has been predicted for quite some time but has so far occurred only to a limited extent. While some intelligent humans will still be needed to direct and control computers and robots, less talented or differently talented people will lose their jobs in unprecedented numbers, causing high social tensions. Both manual and mental kinds of work will be replaced. We should already see clear signs of this around the year 2010.

We commonly imagine robots about the size of humans, often, especially in science fiction horror movies, even bigger, but this may be a mistake. The size of a human is partly determined by the required size of his brain. Nature has not invented any way to make a brain cell smaller or more powerful, so for a certain intelligence one needs at least a certain brain size. An ant cannot have the intelligence of a human, because it simply cannot have enough neurons in its tiny brain.

Humans can only achieve their intelligence by having a brain with a volume of more than one liter. Insects, for example, can be a lot smaller, but only at the cost of not being highly intelligent. Nature has so far been unable to condense rational thinking into a brain the size of, say, a mouse's, not to mention an insect's. The few other animals that have a certain degree of rational intelligence, apes, elephants, and whales, are all big. An elephant brain has a volume of about 5 l.

However, the size requirements for machine intelligence are very much lower, for two reasons.

This means that it is possible to build robots that are very much smaller than humans. Around the year 2000 we call the creation of very small machines "nanotechnology", and research is well under way.

Where are the limits? Our first forays into nanotechnology indicate that machines are quite conceivable on the scale of molecule- or atom-sized elements. This would mean that machines could be built whose "brains" are more powerful than current computers, yet which are only the size of small insects. To achieve macroscopic effects, large numbers of such nanorobots could be used wherever they have some advantage over larger robots.

The fundamental shift to a high-technology-based economy will have profound consequences even in the near future, within the next 10 to 20 years.

We will no longer have anything like a balance between power blocks. Instead, one center of power will be superior to all others, simply because of technology. A power block, such as a country, nation, or group of countries, that can create and master the most advanced technology will be superior to all others, not just economically but also militarily. The outcome of a war between such power blocks will be obvious before the war begins, so technologically advanced nations will not fight all-out wars against each other in the first place. Instead, technologically superior nations will increasingly influence, and we hope not dictate, what others do.

We saw an almost funny expression of this trend in 2002, when Europe tried to establish a competitor to the American Global Positioning System (GPS), a system of some 30 satellites and several ground stations that provides position information to small and cheap GPS receivers, accurate to a few meters for civilian receivers and even more accurate for military receivers permitted to decode military enhancement signals.